In 2011, as part of Hack.Art.Lab, I collaborated with composer Mary Ellen Childs and percussionist Michael Holland to create live animation triggered by the live performance of Childs’ composition “Still Life.”

Video courtesy of Wichita State University Media Resources Center

We divided the piece into 11 sections and created algorithmic video, triggered by sound and motion, to match each one. The video was projected onto a semi-transparent scrim in front of the players.

The algorithms generating the video were statistical rather than deterministic. In addition, performers never play a work exactly the same way twice. Together, these two factors guarantee that every performance of the piece generates unique, unrepeatable video.
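To make the idea of statistical (randomized) generation concrete, here is a minimal sketch in Python. It is not the software used in the performance: the `Particle` type, the `spawn_particles` function, and all of its parameters are hypothetical names invented for illustration. It assumes a detected hit arrives with a position and a loudness between 0 and 1, and it draws the visual details from random distributions, so the same hit produces a different burst each time unless the random generator is seeded identically.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float       # horizontal position, in normalized screen units
    y: float       # vertical position, in normalized screen units
    radius: float  # particle size, in normalized screen units
    hue: float     # color hue in [0, 1)


def spawn_particles(hit_x, hit_y, loudness, rng, max_count=20):
    """Spawn a randomized burst of particles around a detected hit.

    `loudness` is clamped to [0, 1]. Louder hits produce more particles
    and allow larger radii. `rng` is a random.Random instance.
    (All names and constants here are illustrative assumptions.)
    """
    loudness = min(max(loudness, 0.0), 1.0)
    count = 1 + int(loudness * (max_count - 1))
    particles = []
    for _ in range(count):
        particles.append(
            Particle(
                x=rng.gauss(hit_x, 0.05),  # scatter around the hit position
                y=rng.gauss(hit_y, 0.05),
                radius=rng.uniform(0.01, 0.01 + 0.05 * loudness),
                hue=rng.random(),
            )
        )
    return particles


# Two "performances" of the same hit, each with its own random stream.
first_night = spawn_particles(0.5, 0.5, 0.5, random.Random(1))
second_night = spawn_particles(0.5, 0.5, 0.5, random.Random(2))
```

In a live setting the generator would typically be left unseeded, and the hit positions and loudness values would themselves vary from night to night, which compounds the variation.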

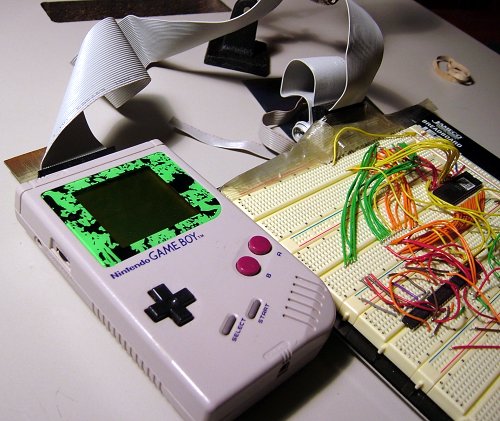

We attached infrared LEDs to the performers’ percussion sticks so that three modified video cameras could track them in real time. During the performance, I controlled the animation at a high level by following the section changes and pressing the corresponding button in my custom software.
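The tracking software itself is not described here, but the basic idea of locating an infrared LED in a camera frame can be sketched as follows. This is a simplified illustration under stated assumptions: the frame is a plain grid of 8-bit grayscale values, only one LED is visible, and the function name `find_led_centroid` and its `threshold` parameter are hypothetical. A real system with several sticks and three cameras would also need to separate multiple bright blobs and combine the camera views.

```python
def find_led_centroid(frame, threshold=200):
    """Return the intensity-weighted (x, y) centroid of bright pixels.

    `frame` is a list of rows, each a list of grayscale values (0-255).
    Pixels at or above `threshold` are treated as part of the LED.
    Returns None when no pixel is bright enough.
    (Illustrative sketch; assumes a single LED in view.)
    """
    total = 0
    sum_x = 0
    sum_y = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                total += value
                sum_x += x * value
                sum_y += y * value
    if total == 0:
        return None
    return (sum_x / total, sum_y / total)


# A tiny 5x5 test frame with a single bright pixel at column 3, row 1.
frame = [[0] * 5 for _ in range(5)]
frame[1][3] = 255
position = find_led_centroid(frame)
```

Because the cameras were modified to see infrared light, the LEDs would likely stand out strongly against the rest of the stage, which is why a simple brightness threshold is a plausible starting point.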